You make a cool reusable component, publish it and get it used by M people in N projects. Everything is good and under control... until a CHANGE HAPPENS. Then a few bugs arrive from end users. Then you tweak some internal bits and add another feature... Unless you have good versioning practices in place, you'll end up in DLL hell, and everyone will hate you for making a messy, never-working, poorly supported component in the first place.

The following describes a practice I use for versioning reusable components and staying in control (or at least closer to sane).

What do I need?

The absolute must is being able to tell, from the dll itself, which code it was compiled from.

For example, if a user emails you with a question or a problem, you want to know the EXACT code they used. Without that, you cannot reliably reproduce the problem they had.

Second, the assembly's identifying information must be unique enough without requiring constant effort from developers.

Third, you want to control when an assembly can be upgraded without recompiling the assemblies that call it. This matters most when you have a chain of dependent assemblies and one of the bottom-layer ones gets a non-breaking change: there is no need to recompile and re-release every other component in the chain. It matters even more when fully qualified names are stored in configuration. Obviously this must be used with care, as it is really bad when the loader loads an only partially compatible assembly and you get a runtime exception for a missing method or a similar avoidable error.
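To see why a stable AssemblyVersion lets a hotfixed dll replace the old one without recompiling callers, it helps to look at what a fully qualified name stored in configuration actually contains. The sketch below parses the assembly part with System.Reflection.AssemblyName; the names MyCompany.Logging and BindingIdentityDemo are hypothetical. Keep in mind that the .NET Framework loader enforces this version only for strong-named assemblies, and binding redirects can override it.

```csharp
using System;
using System.Reflection;

// Sketch: inspects the assembly part of a fully qualified type name, as it might be
// stored in a config file. Does not handle generic type names (their brackets contain commas).
public static class BindingIdentityDemo
{
    // "Type, Assembly, Version=..., Culture=..., PublicKeyToken=..." -> the assembly identity.
    public static AssemblyName ParseAssemblyPart(string assemblyQualifiedTypeName)
    {
        int comma = assemblyQualifiedTypeName.IndexOf(',');
        if (comma < 0)
        {
            throw new ArgumentException("Expected 'Type, Assembly, ...'", nameof(assemblyQualifiedTypeName));
        }
        return new AssemblyName(assemblyQualifiedTypeName.Substring(comma + 1).Trim());
    }

    // True if a build whose AssemblyVersion is 'builtVersion' still matches the stored reference.
    public static bool StillMatches(string assemblyQualifiedTypeName, Version builtVersion)
    {
        return ParseAssemblyPart(assemblyQualifiedTypeName).Version == builtVersion;
    }
}
```

With a stored name such as "MyCompany.Logging.FileLogger, MyCompany.Logging, Version=1.2.0.0, Culture=neutral, PublicKeyToken=null", every hotfix build that keeps AssemblyVersion at 1.2.0.0 still matches, while a bump to 1.3.0.0 does not.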

Optionally, it would be cool to see easily from the dll the exact date and time the assembly was built, by whom, on which machine, and with which configuration (Debug/Release). This makes the assembly's origin even more obvious.

What tools do I have?

Obviously, there are attributes for attaching metadata to an assembly:

- AssemblyVersionAttribute
  - Used by the runtime loader to determine which assembly to load.
  - Shown in Windows file properties as "File version", but only if there is no AssemblyFileVersionAttribute value.
  - Format: forced 4-part numeric, i.e. '1.0.123.23425' (omitted trailing parts become 0).
- AssemblyFileVersionAttribute
  - Ignored by assembly loading.
  - Some setup tools use it to decide whether an assembly should be upgraded or "already exists".
  - Shown in Windows file properties as "File version".
  - Format: free text, but the OS recognizes only the 4-part numeric format.
- AssemblyInformationalVersionAttribute
  - Dumb metadata, shown in Windows file properties as "Product version".
  - Format: free text.
- AssemblyConfigurationAttribute and AssemblyDescriptionAttribute
  - Dumb metadata, readable only by .NET tools.
  - Format: free text.

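Note that while AssemblyVersion supports an asterisk to autogenerate the last two components (e.g. '1.2.*'), AssemblyFileVersion does not, so the asterisk is of no use here. In AssemblyVersion it would also change the assembly identity on every build, which defeats the upgrade control described above.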
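A minimal sketch of reading the three version values from an already-loaded assembly, for example to show them in an About box or attach them to a bug report. It assumes .NET Framework 4.5+ or .NET Core, where the generic GetCustomAttribute&lt;T&gt; extension in System.Reflection is available; the class name VersionReport is hypothetical.

```csharp
using System;
using System.Reflection;

// Sketch: collects AssemblyVersion, AssemblyFileVersion and AssemblyInformationalVersion
// from a loaded assembly into one line of text.
public static class VersionReport
{
    public static string Describe(Assembly assembly)
    {
        // AssemblyVersion: part of the assembly identity used by the loader.
        Version assemblyVersion = assembly.GetName().Version;

        // The other two are plain metadata attributes and may be absent
        // (GetCustomAttribute returns null in that case).
        string fileVersion =
            assembly.GetCustomAttribute<AssemblyFileVersionAttribute>()?.Version ?? "(none)";
        string informationalVersion =
            assembly.GetCustomAttribute<AssemblyInformationalVersionAttribute>()?.InformationalVersion ?? "(none)";

        return $"AssemblyVersion={assemblyVersion}; FileVersion={fileVersion}; InformationalVersion={informationalVersion}";
    }
}
```

Usage: VersionReport.Describe(typeof(SomeTypeInYourLibrary).Assembly), where SomeTypeInYourLibrary stands for any public type in the component.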

In Windows Explorer, the Details tab of a dll's Properties dialog exposes some of this version metadata.
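The same "File version" and "Product version" values can be read from a dll on disk without loading it into the process, using System.Diagnostics.FileVersionInfo; on Windows it reads the same version resource that Explorer displays. A sketch; the class name ExplorerDetails is hypothetical.

```csharp
using System;
using System.Diagnostics;

// Sketch: reports the version strings Explorer shows on the Details tab.
public static class ExplorerDetails
{
    public static string Describe(string path)
    {
        FileVersionInfo info = FileVersionInfo.GetVersionInfo(path);
        // FileVersion comes from AssemblyFileVersion (or AssemblyVersion if that attribute is missing);
        // ProductVersion comes from AssemblyInformationalVersion.
        return $"File version: {info.FileVersion}; Product version: {info.ProductVersion}";
    }
}
```

This is handy for a random dll someone sends you, since nothing from it gets executed.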

What version SCHEME to use?

I suggest identifying every assembly by a 4-part numeric version number:

- <major> - increment on a major functional change

- <minor> - increment on every breaking functional change

- <date> - compile date, for uniqueness

- <time> - compile time of day, for uniqueness

AssemblyVersion: '<major>.<minor>.0.0'.

FileVersion: '<major>.<minor>.<date>.<time>'

InformationalVersion can contain everything else.

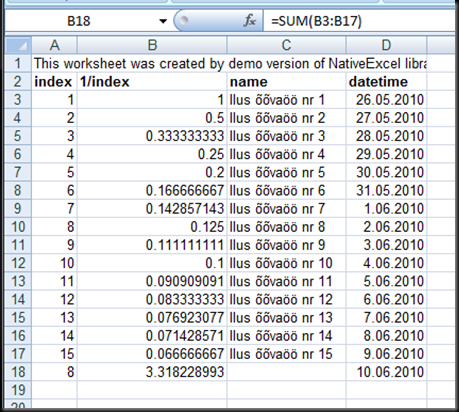

The <date> and <time> parts should be human-readable and, if possible, ever-increasing. Since each version component is limited to a 16-bit value (UInt16.MaxValue is 65535), some compromise is necessary: a full yyMMdd date such as 130425 does not fit.

For example, assembly release 1.2.0.0 may be deployed as compiled file 1.2.30425.1148, with an informational version stating that it was built by me on my PC A, today before lunch.
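The encoding can be made concrete with a small sketch that mirrors the date/time arithmetic of the T4 template shown later in this post (last digit of the year mod 6, then MMdd, then HHmm). The class and method names are hypothetical.

```csharp
using System;
using System.Globalization;

// Sketch of the '<major>.<minor>.<date>.<time>' scheme plus the informational text.
public static class VersionScheme
{
    public static string FileVersion(int major, int minor, DateTime buildTime)
    {
        // Last year digit mod 6 keeps <date> <= 51231, under the 16-bit limit of 65535.
        int yearPart = buildTime.Year % 10 % 6;
        // Parsing to int drops leading zeros (09:05 -> 905); the numeric value is unchanged.
        int datePart = int.Parse(yearPart + buildTime.ToString("MMdd", CultureInfo.InvariantCulture));
        int timePart = int.Parse(buildTime.ToString("HHmm", CultureInfo.InvariantCulture));
        return $"{major}.{minor}.{datePart}.{timePart}";
    }

    public static string InformationalVersion(int major, int minor, DateTime buildTime, string user, string machine)
    {
        // "s" is the sortable ISO 8601 pattern, e.g. 2013-04-15T11:28:42.
        string stamp = buildTime.ToString("s", CultureInfo.InvariantCulture);
        return $"{major}.{minor} (on {stamp} by {user} at {machine})";
    }
}
```

For a build on 2013-04-25 at 11:48, FileVersion(1, 2, ...) gives "1.2.30425.1148", the file version from the example above. The trade-off is that the year digit wraps every six years, so versions are only ever-increasing within that window.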

I don't see a real need for more than 2 meaningful parts in AssemblyVersion:

- Hotfix (no breaking API change) - change only the compile timestamp.

- Non-breaking functional change - depending on the change, consider incrementing <minor> or just treating it as a hotfix.

- Breaking functional change - increment <minor>, or optionally <major>.
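The rules above can be written down as a tiny decision function. ChangeKind and VersionBump are hypothetical names; for a breaking change this sketch bumps <minor>, although the rules also allow bumping <major> instead.

```csharp
using System;

// Hypothetical classification of a change, following the three cases above.
public enum ChangeKind { Hotfix, NonBreakingFeature, BreakingChange }

public static class VersionBump
{
    // Returns the next two-part AssemblyVersion ("<major>.<minor>").
    // For a hotfix only the compile timestamp (FileVersion) changes, so AssemblyVersion stays.
    public static Version Next(Version current, ChangeKind kind, bool treatFeatureAsHotfix = false)
    {
        switch (kind)
        {
            case ChangeKind.Hotfix:
                return new Version(current.Major, current.Minor);
            case ChangeKind.NonBreakingFeature:
                return treatFeatureAsHotfix
                    ? new Version(current.Major, current.Minor)
                    : new Version(current.Major, current.Minor + 1);
            case ChangeKind.BreakingChange:
                return new Version(current.Major, current.Minor + 1);
            default:
                throw new ArgumentOutOfRangeException(nameof(kind));
        }
    }
}
```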

I advise against using AssemblyDescriptionAttribute or AssemblyConfigurationAttribute for versioning info, as that info is harder to browse for arbitrary dlls.

How to get it automatically?

Obviously, AssemblyVersion must be changed manually by developers. No automation is needed there.

```csharp
[assembly: AssemblyVersion("1.2")]
```

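FileVersion and InformationalVersion, on the other hand, should be regenerated on each build. For this I created a T4 template, AssemblyInformationalVersion.tt. Since the generated file declares both attributes, remove any AssemblyFileVersion and AssemblyInformationalVersion lines from AssemblyInfo.cs, otherwise the compiler reports a duplicate attribute: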

```
<#@ template debug="false" hostspecific="true" language="C#" #>
<#@ assembly name="System.Core" #>
<#@ import namespace="System" #>
<#@ import namespace="System.Linq" #>
<#@ import namespace="System.IO" #>
<#@ output extension="gen.cs" #>
<#
string informationalVersion;
string assemblyVersion;
string fileVersionAddition;
try
{
    // AssemblyInfo.cs is resolved relative to this template's folder.
    var path = this.Host.ResolvePath("AssemblyInfo.cs");
    // Skip commented-out lines such as the default "// [assembly: AssemblyVersion("1.0.*")]".
    string versionLine = File.ReadAllLines(path)
        .First(line => !line.TrimStart().StartsWith("//") && line.Contains("AssemblyVersion("));
    assemblyVersion = versionLine.Split('"')[1];
    if (assemblyVersion.Split('.').Length != 2)
    {
        throw new Exception("AssemblyVersion must be in format '<major>.<minor>'");
    }

    DateTime compileDate = DateTime.Now;
    // Last digit of the year mod 6, so <date> stays <= 51231 (version components are 16-bit, max 65535).
    int yearpart = compileDate.Year % 10 % 6;
    fileVersionAddition = yearpart + compileDate.ToString("MMdd") + "." + compileDate.ToString("HHmm");
    informationalVersion = String.Format("{0} (on {1} by {2} at {3})",
        assemblyVersion,
        compileDate.ToString("s"),
        Environment.UserName,
        Environment.MachineName);
}
catch (Exception exception)
{
    WriteLine("Error generating output : " + exception.ToString());
    throw;
}
#>
using System.Reflection;
//NB! this file is automatically generated. All manual changes will be lost on build.
// Generated based on assembly version: <#= assemblyVersion #>
[assembly: AssemblyFileVersion("<#= assemblyVersion #>.<#= fileVersionAddition #>")]
[assembly: AssemblyInformationalVersion("<#= informationalVersion #>")]
```

...and trigger it on each build, for example with a pre-build event (the TextTemplating\11.0 folder is the Visual Studio 2012 location; adjust it for your version):

```
"%COMMONPROGRAMFILES(x86)%\microsoft shared\TextTemplating\11.0\TextTransform.exe" -out "$(ProjectDir)Properties\AssemblyInformationalVersion.gen.cs" "$(ProjectDir)Properties\AssemblyInformationalVersion.tt"
```

So, on each build I will have detailed versioning info generated for my assembly automatically:

```csharp
using System.Reflection;
//NB! this file is automatically generated. All manual changes will be lost on build.
// Generated based on assembly version: 1.2
[assembly: AssemblyFileVersion("1.2.30415.1128")]
[assembly: AssemblyInformationalVersion("1.2 (on 2013-04-15T11:28:42 by MyUser at MYMACHINE)")]
```

There is a simple one-time copy-paste setup, and then it just works as long as you compile with Visual Studio. I'm not 100% sure whether the AssemblyInfo.cs path gets resolved by build-server hosts.
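If the T4 host is not available on a build server, one option is to run the same logic from a small console tool instead. The sketch below is a host-independent version of the template above: the caller passes in the AssemblyInfo.cs text, build time, user and machine, and gets back the generated file content. VersionFileGenerator is a hypothetical name, and this is an illustration, not a tested replacement for the template.

```csharp
using System;
using System.Globalization;
using System.Linq;

// Sketch: the template's logic without the T4 host.
public static class VersionFileGenerator
{
    public static string Generate(string assemblyInfoText, DateTime buildTime, string user, string machine)
    {
        // First non-comment line declaring AssemblyVersion, e.g. [assembly: AssemblyVersion("1.2")].
        // First() throws InvalidOperationException if there is none.
        string versionLine = assemblyInfoText
            .Split('\n')
            .Select(line => line.Trim())
            .First(line => !line.StartsWith("//") && line.Contains("AssemblyVersion("));
        string assemblyVersion = versionLine.Split('"')[1];
        if (assemblyVersion.Split('.').Length != 2)
        {
            throw new FormatException("AssemblyVersion must be in format '<major>.<minor>'");
        }

        // Same encoding as the template: year digit mod 6, MMdd, then HHmm.
        int yearPart = buildTime.Year % 10 % 6;
        string fileVersion = assemblyVersion + "." + yearPart
            + buildTime.ToString("MMdd", CultureInfo.InvariantCulture) + "."
            + buildTime.ToString("HHmm", CultureInfo.InvariantCulture);
        string stamp = buildTime.ToString("s", CultureInfo.InvariantCulture);
        string informational = $"{assemblyVersion} (on {stamp} by {user} at {machine})";

        return "using System.Reflection;\n"
            + "//NB! this file is automatically generated. All manual changes will be lost on build.\n"
            + $"// Generated based on assembly version: {assemblyVersion}\n"
            + $"[assembly: AssemblyFileVersion(\"{fileVersion}\")]\n"
            + $"[assembly: AssemblyInformationalVersion(\"{informational}\")]\n";
    }
}
```

A build step could then call File.WriteAllText on the output path with Generate(File.ReadAllText(assemblyInfoPath), DateTime.Now, Environment.UserName, Environment.MachineName).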